Meta incrementality testing: finding real marketing lift

- 1. What Meta and Facebook incrementality tests actually measure

- 2. Why platform-only incrementality creates blind spots

- 3. Interpreting Meta’s lift data through a business lens

- 4. The role of cross-channel interactions and halo effects

- 5. Beyond Meta: building a holistic incrementality framework

- 6. How fusepoint connects platform lift to real business impact

Only 8% of marketers use incrementality testing, even though 41% report struggling to measure ROI accurately. That gap between attributed performance and true lift is exactly why incrementality testing exists.

Meta has done more than any platform to push incrementality into the mainstream. Its conversion lift studies give performance marketers a way to ask a better question than “Did this ad get a click?” But too often, those results are taken at face value and treated as definitive proof of business impact.

Meta’s experiments are designed to measure in-platform incremental lift, not total revenue impact across the full media mix. That focus makes incrementality experiments powerful, but only when the results are interpreted in context, pressure-tested against other channels, and connected back to real revenue outcomes.

What Meta and Facebook incrementality tests actually measure

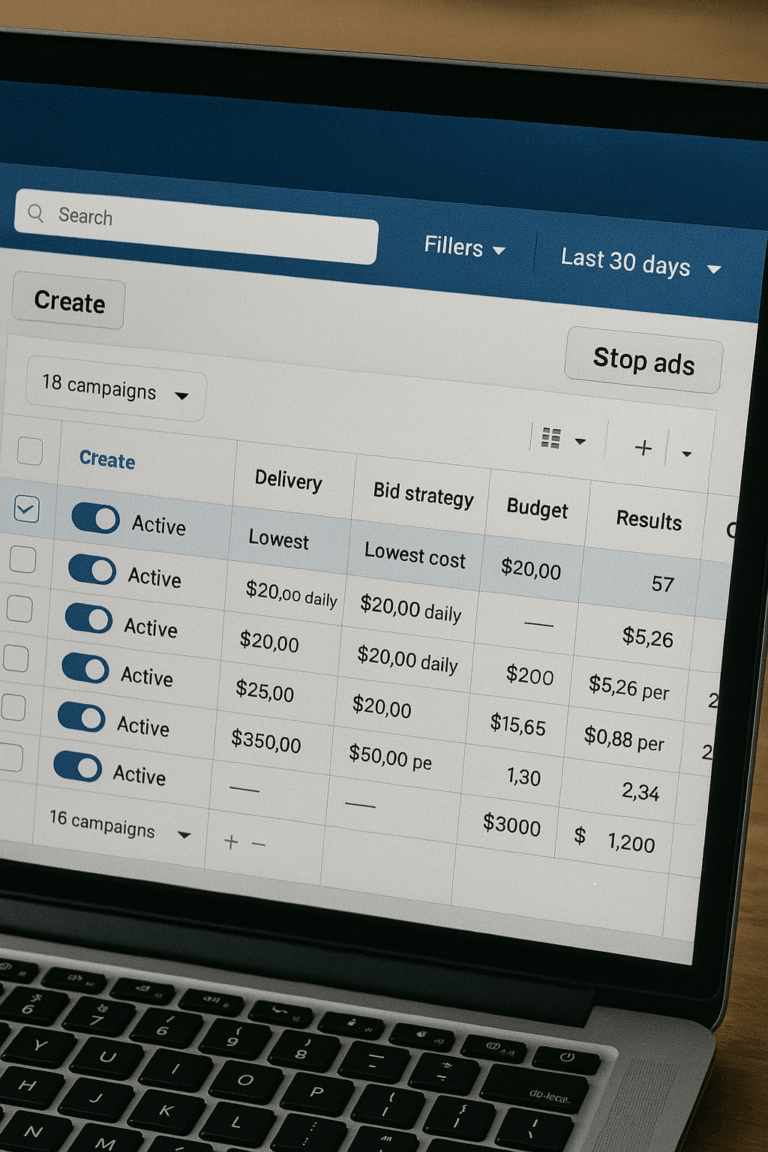

At a technical level, Meta incrementality testing is a randomized controlled experiment.

A portion of users is withheld from seeing ads (the control group). Another portion is exposed (the test group). Facebook incrementality testing then measures the difference in conversions between the two groups to estimate lift.

That design eliminates attribution uncertainty and addresses a clean causal question. But there are important boundaries to understand.

- The measurement lives entirely inside Meta’s world. Lift is calculated based on conversions Meta can observe and attribute, typically on-platform or directly trackable downstream actions.

- The outcome is limited to Meta exposure. The test does not measure how advertising influences search behavior, direct traffic, retail sales, or repeat purchases that occur later or elsewhere.

- Time horizons are short. Most conversion lift studies focus on immediate or near-term actions rather than lifetime value or retention effects.
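The test-versus-control comparison described above can be sketched in a few lines of Python. This is a simplified illustration, not Meta's actual methodology: the function name, the input numbers, and the normal-approximation z-score are all assumptions chosen for clarity.

```python
from math import sqrt


def conversion_lift(test_users, test_conversions, control_users, control_conversions):
    """Estimate incremental conversions and relative lift from a holdout test.

    Hypothetical helper: a plain two-group comparison of conversion rates.
    """
    if control_conversions <= 0:
        raise ValueError("control group needs at least one conversion")

    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users

    # Conversions the test group would have produced without ads,
    # scaled from the control group's conversion rate.
    baseline = control_rate * test_users
    incremental = test_conversions - baseline
    relative_lift = (test_rate - control_rate) / control_rate

    # Standard error of the difference between two proportions
    # (normal approximation), used for a rough significance check.
    se = sqrt(
        test_rate * (1 - test_rate) / test_users
        + control_rate * (1 - control_rate) / control_users
    )
    z_score = (test_rate - control_rate) / se

    return {
        "incremental_conversions": incremental,
        "relative_lift": relative_lift,
        "z_score": z_score,
    }


# Illustrative numbers only (not from any real study).
result = conversion_lift(
    test_users=100_000, test_conversions=2_240,
    control_users=100_000, control_conversions=2_000,
)
print(f"{result['incremental_conversions']:.0f}")  # 240
print(f"{result['relative_lift']:.1%}")            # 12.0%
```

Note that every input here is a conversion the platform can observe. The calculation is sound; the boundaries listed above determine what it can and cannot see.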

Why platform-only incrementality creates blind spots

When platform lift is treated as business lift, three problems tend to arise.

- Cross-channel effects disappear

  Meta ads often influence behavior that shows up elsewhere. A user may see an ad and convert later via branded search, direct traffic, or retail. That downstream demand is real, but invisible to Meta’s test.

- Offline and delayed value gets ignored

  In categories such as insurance, automotive, and omnichannel retail, conversions may occur days or weeks later, often offline. Platform-only lift systematically understates (or misallocates) that value.

- Budget decisions become locally optimal

  Optimizing ad spend based on Meta-only lift can divert budget from channels that drive demand but don’t “own” the final conversion. Over time, this shrinks the top of the funnel while performance metrics look healthy.

This is how teams end up with dashboards full of lift and businesses starved of growth.

Meta’s incrementality isn’t flawed; it simply addresses one slice of the problem. True incrementality requires stitching those slices together.

Interpreting Meta’s lift data through a business lens

Meta’s lift results answer a narrow question well: Did ads on Meta cause more conversions inside Meta than would have happened without them?

The mistake is assuming that the answer translates directly into business impact.

To use Facebook incrementality testing effectively, teams need to step back.

Start by treating lift as a component, not a verdict

Meta’s reported lift shows incremental activity within its own system. That’s useful for comparing campaigns or platform formats, but it’s not a final read on ROI.

A lift study can show strong incremental conversions, but total revenue may remain flat or decline if spend is pulling demand forward or cannibalizing other channels.

Validate lift against independent data

The fastest reality check is outside Meta’s walls. Compare lift periods with changes in:

- CRM signups

- First-time purchasers

- Repeat-purchase rates

- Offline sales

If Meta lift rises but downstream metrics don’t move, the incrementality is likely narrower than it appears.

For example, a brand might see a 12% lift in Meta-attributed conversions during a test. But CRM data shows no increase in new customers and no change in total orders. That suggests the ads influenced attribution paths rather than net demand.

Compare Meta lift to other channels running at the same time

Incrementality does not exist in isolation. If paid search, email, or CTV spend is also changing, Meta’s lift may reflect broader demand creation rather than platform-specific impact. Without this context, Meta can appear more effective simply because it sits closer to the point of conversion.

A common pattern is Meta retargeting showing a strong lift during a brand campaign. In reality, awareness media created the demand; Meta simply captured it.

Reframe lift in revenue terms, not conversion counts

Conversions are a proxy. Profit is the outcome. As such, it’s crucial to translate Meta’s lift into incremental revenue, margin, and payback. A lift that produces low-value, discount-driven customers may look strong in-platform but weaken unit economics.
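As a rough sketch of that translation, the snippet below converts the same number of incremental conversions into revenue, margin, and first-order payback for two hypothetical campaigns. The function name, order values, margin rates, and spend are all illustrative assumptions, and "first-order payback" here simply means the share of spend recovered by margin on the first purchase.

```python
def lift_to_unit_economics(incremental_conversions, avg_order_value,
                           gross_margin_rate, ad_spend):
    """Express platform lift in money terms (hypothetical helper)."""
    if ad_spend <= 0 or incremental_conversions <= 0:
        raise ValueError("ad_spend and incremental_conversions must be positive")

    incremental_revenue = incremental_conversions * avg_order_value
    incremental_margin = incremental_revenue * gross_margin_rate

    return {
        "incremental_revenue": incremental_revenue,
        "incremental_margin": incremental_margin,
        # Revenue generated per dollar of spend, counting only incremental orders.
        "incremental_roas": incremental_revenue / ad_spend,
        "cost_per_incremental_conversion": ad_spend / incremental_conversions,
        # Share of spend recovered by margin on the first order alone.
        "first_order_payback": incremental_margin / ad_spend,
        "net_contribution": incremental_margin - ad_spend,
    }


# Same lift and spend, different customer quality (illustrative numbers).
full_price = lift_to_unit_economics(240, avg_order_value=80.0,
                                    gross_margin_rate=0.5, ad_spend=6_000.0)
discount = lift_to_unit_economics(240, avg_order_value=60.0,
                                  gross_margin_rate=0.3, ad_spend=6_000.0)

print(round(full_price["net_contribution"]))  # 3600
print(round(discount["net_contribution"]))    # -1680
```

Both campaigns would report identical lift in-platform, yet under these assumed inputs only one of them covers its own spend on the first order.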

The goal is to place Meta’s data in its proper place: as one signal within a larger measurement system that reflects how the business actually grows.

The role of cross-channel interactions and halo effects

Here’s a common pattern seen in real marketing systems:

- A user sees a Meta video ad.

- They don’t click.

- Two days later, they search the brand on Google and convert.

Incrementality testing on Meta may show modest in-platform lift, and search attribution looks strong. But neither system, on its own, captures the full causal chain. Awareness was created in one channel, while conversion was captured in another.

Performance channels capturing credit

Retargeting is another frequent blind spot. Display or social ads create intent early, while retargeting or branded search harvest demand later.

When lift is measured only at the point of conversion, upstream influence disappears. The result is systematic overinvestment in “closer” channels and underinvestment in the channels that actually create demand.

For example, Meta retargeting campaigns often show strong incrementality in-platform because they sit closest to the conversion moment. But those users were warmed by email, content, affiliates, or offline activity long before the Meta ad appeared.

Without accounting for those interactions, lift gets misattributed. The last visible nudge receives credit for demand it didn’t create.

The risk of double-counting lift

When each platform runs its own incrementality tests in isolation, the same conversion can appear “incremental” in multiple places, such as Meta, search, and email.

Each of the three can be directionally correct. Together, they can exceed 100% of reality.

That’s the halo problem: Not that halo effects exist (they always do), but that they’re invisible unless you measure across channels instead of inside them.
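A quick sanity check for double-counting is to add up what each platform claims and compare the sum with the conversions the business actually recorded. The sketch below assumes hypothetical per-platform figures and a hypothetical function name; it only flags the overlap and does not resolve which channel deserves the credit.

```python
def claimed_share_of_actual(platform_claims, total_conversions):
    """Sum platform-reported incremental conversions and compare to actual totals.

    platform_claims: dict of channel name -> incremental conversions that
    channel's own test reported (hypothetical inputs).
    """
    if total_conversions <= 0:
        raise ValueError("total_conversions must be positive")

    claimed_total = sum(platform_claims.values())
    share = claimed_total / total_conversions
    return {
        "claimed_total": claimed_total,
        "share_of_actual": share,
        # More incremental conversions claimed than conversions that exist.
        "overcounted": share > 1.0,
    }


# Illustrative numbers: three isolated tests over the same period.
claims = {"meta": 400, "search": 500, "email": 300}
check = claimed_share_of_actual(claims, total_conversions=1_000)

print(check["claimed_total"])             # 1200
print(f"{check['share_of_actual']:.0%}")  # 120%
print(check["overcounted"])               # True
```

A share below 100% does not rule out overlap, since some conversions would have happened with no marketing at all. The check only catches the most obvious cases.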

Beyond Meta: building a holistic incrementality framework

Advanced teams don’t stop at platform experiments. They use them as inputs into a broader system.

Market-level experiments

One way to break out of platform silos is geo experimentation. Instead of testing users inside Meta, you test markets:

- Turn Meta off in a set of regions.

- Hold everything else constant.

- Observe changes in total revenue, search demand, and downstream performance.


This captures spillover effects that platform tests cannot see.
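One simple way to read a geo test like this is a difference-in-differences comparison: measure how total revenue changed in the markets where Meta was turned off, then subtract the change in the markets where it kept running. The sketch below is a minimal version under stated assumptions: the weekly revenue figures are invented, the function name is hypothetical, and the method assumes both sets of markets would have trended alike without the intervention.

```python
from statistics import mean


def geo_diff_in_diff(holdout_pre, holdout_post, control_pre, control_post):
    """Difference-in-differences on average revenue per market.

    holdout_*: revenue per market where Meta was turned off.
    control_*: revenue per market where Meta kept running.
    A negative result means revenue fell in holdout markets relative to control.
    """
    holdout_change = mean(holdout_post) - mean(holdout_pre)
    control_change = mean(control_post) - mean(control_pre)
    return holdout_change - control_change


# Illustrative weekly revenue per market, in thousands (hypothetical data).
effect = geo_diff_in_diff(
    holdout_pre=[100, 120, 110],
    holdout_post=[92, 111, 100],
    control_pre=[105, 115, 95],
    control_post=[107, 118, 96],
)
print(effect)  # -11
```

Because the outcome is total revenue per market rather than platform-attributed conversions, the estimate includes demand that would have surfaced through search, direct traffic, or retail. A production analysis would also need enough markets and weeks to separate the effect from noise.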

Control markets and time-based holdouts

Time-based holdout testing can serve a similar purpose. Temporarily pausing Meta activity while holding other variables constant reveals whether demand persists or fades. This helps separate true incremental lift from demand that would have occurred anyway.

Incrementality results become more reliable when they’re pressure-tested against independent models.

If Meta’s lift study indicates a strong impact, media mix modeling (MMM) should reflect a corresponding movement at the market level. If it doesn’t, that’s a signal that the lift may be narrower or shorter-term than assumed.

Estimating blended ROI

Finally, results are normalized across platforms. Meta, Google, CTV, and other channels are evaluated on the same basis: incremental revenue, margin impact, and payback period.

This is how incrementality moves from a platform feature to a decision system.

How fusepoint connects platform lift to real business impact

While platform lift answers a narrow question, true business impact demands a broader one.

Incrementality tests from Meta or Google can tell you whether ads caused additional conversions inside that platform. What they can’t tell you, on their own, is whether those conversions mattered to the business once you account for cross-channel influence, customer value, and profit. That gap is where most incrementality programs fall apart.

fusepoint treats platform lift as evidence, not a verdict.

- The process starts by grounding lift results in reality. Platform experiments serve as inputs to a broader measurement system that includes channel incrementality testing, marketing mix modeling, and profitability analysis.

- This allows the experts at fusepoint to ask harder questions: Did Meta lift translate into incremental revenue, or merely shift demand from search? Did Google lift improve customer quality, or accelerate conversions that would have happened anyway?

- From there, the team connects lift to financial outcomes, aligning experimental results with CAC, CLV, margin, and payback, so leadership isn’t left debating conversions.

- When lift doesn’t survive that scrutiny, you can trust fusepoint to say so. When it does, we help you scale it.

The result is a unified measurement system that holds up outside the platform UI. It separates correlation from causation, and short-term signal from long-term value.

Incrementality testing is powerful, but only when it’s part of a system built to answer the questions that actually matter. That is the focus of fusepoint’s marketing performance measurement consulting: turning platform data into decisions leadership can stand behind.

Sources:

Supermetrics. The 2025 marketing data report. https://discover.supermetrics.com/marketing-data-report-2025/?utm_source=event&utm_medium=event&utm_campaign=EUR_PTR_TXT_DXY_EXY_FXY_ZXY

Facebook Business. About incrementality when running tests on Meta technologies. https://www.facebook.com/business/help/974558639546165

arXiv. Designing Experiments to Measure Incrementality on Facebook. https://arxiv.org/abs/1806.02588

ResearchGate. Cyber-Driven Advertising: The Impact of META Promotional Ads on Consumer Purchase Intent in the UK Retail & Fashion Sector. https://www.researchgate.net/publication/389868181_Cyber-Driven_Advertising_The_Impact_of_META_Promotional_Ads_on_Consumer_Purchase_Intent_in_the_UK_Retail_Fashion_Sector
